AMD seems to have the upper hand over Nvidia when it comes to memory latency with current-gen graphics cards.

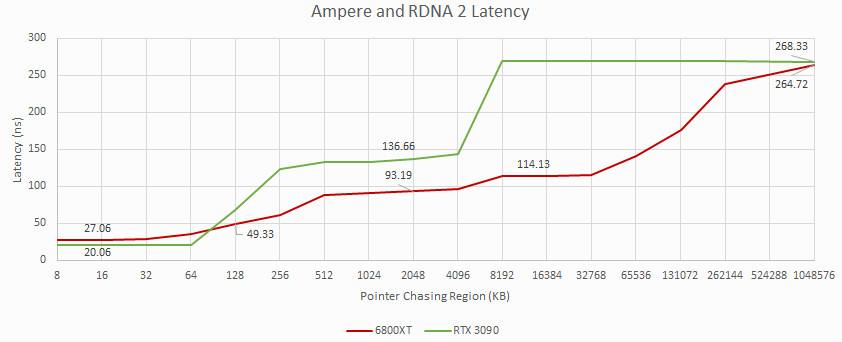

This conclusion was drawn by tech site Chips and Cheese, which compared Big Navi and Ampere using pointer-chasing benchmarks written in OpenCL to measure memory latency in nanoseconds (ns), pitting AMD’s RX 6800 XT against Nvidia’s RTX 3090.
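
For readers unfamiliar with the technique, here is a minimal sketch of the pointer-chasing idea. It is written in plain Python for illustration and is not Chips and Cheese's OpenCL code; the function names (`build_chain`, `chase`, `ns_per_load`) are hypothetical. Each load's address depends on the result of the previous load, so the hardware cannot overlap or prefetch the accesses, and the time per step approximates latency rather than bandwidth.

```python
import random
import time


def build_chain(n, seed=0):
    """Return a list where chain[i] is the next index to visit.

    Sattolo's algorithm yields a single cycle covering all n slots,
    so the walk cannot get stuck in a short, cache-friendly loop.
    """
    rng = random.Random(seed)
    chain = list(range(n))
    for i in range(n - 1, 0, -1):
        j = rng.randrange(i)  # j < i guarantees one big cycle
        chain[i], chain[j] = chain[j], chain[i]
    return chain


def chase(chain, steps):
    """Follow the chain for `steps` dependent loads; return the final index."""
    idx = 0
    for _ in range(steps):
        idx = chain[idx]  # next address depends on this load's result
    return idx


def ns_per_load(n, steps=200_000):
    """Average wall-clock nanoseconds per dependent load for an n-slot chain."""
    chain = build_chain(n)
    start = time.perf_counter_ns()
    chase(chain, steps)
    return (time.perf_counter_ns() - start) / steps
```

In a compiled or GPU version of this pattern, growing `n` past the size of each cache level would be expected to show up as a step in the time per load. In Python the interpreter overhead dominates, so treat this as a picture of the access pattern only, not as a way to reproduce the figures quoted here.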

The results were interesting, to say the least: the Big Navi card has more cache layers to travel through on the journey to memory, yet it manages to match the Ampere GPU.

Nvidia runs with a straightforward scheme of two levels of cache, L1 and L2. Cache is a small amount of very fast storage right there on the chip, much faster than the actual video RAM, just as is the case with CPUs.

With Big Navi, however, AMD does things very differently, using multiple layers of cache: L0, L1, L2 and Infinity Cache (which is effectively L3). That means there are more levels to pass through, as we mentioned, so you’d think this would add latency, but that’s not the case.

In fact, AMD’s cache is impressively quick, with low latency through all those layers, whereas Nvidia has a high L2 latency: 100ns, compared to around 66ns for AMD from L0 to L2. The upshot is that the overall result is pretty much a dead heat.

Amazing feat

As Chips and Cheese observes: “Amazingly, RDNA 2’s [Big Navi] VRAM latency is about the same as Ampere’s, even though RDNA 2 is checking two more levels of cache on the way to memory.”

The site points out that AMD’s speedy L2 and L3 caches with their very low latency could give Big Navi GPUs the edge in less demanding workloads, and that this might explain why RX 6000 graphics cards do very well at lower resolutions (where the GPU isn’t being pushed nearly as hard).

Certainly, that’s a tentative conclusion, and we need to be careful about reading too much into any single test. Furthermore, it’s unclear how any such memory latency advantage might translate into real-world performance in games anyway.

Chips and Cheese also notes that memory latency with CPUs is far lower, of course, but that comparatively “GDDR6 latency itself isn’t so bad” on an overall level.

Via Tom’s Hardware

from TechRadar: computing components news https://ift.tt/3egb6gy
