While last year was a relatively quiet one for AMD – well, as quiet as things get for a tech behemoth which is a huge presence in the CPU and GPU worlds – 2022 was very different: a year of major next-gen launches. We witnessed the arrival of the long-awaited Zen 4 processors, and also RDNA 3 graphics cards, plus more besides.

AMD had a lot to live up to, though, given the rival next-gen launches from Intel and Nvidia, and Team Red certainly ran into some controversies throughout the year, too. Let’s break things down to evaluate exactly how AMD performed over the past 12 months.

CPUs: V-Caching in, and Ryzen 7000 versus Raptor Lake

In the CPU space, AMD’s first notable move of 2022 was to unleash its new 3D V-Cache tech, launching an ‘X3D’ processor in April that was aimed at gamers. The Ryzen 7 5800X3D was hailed as a great success, and indeed really did beef up performance levels with gaming for the 8-core CPU, as intended.

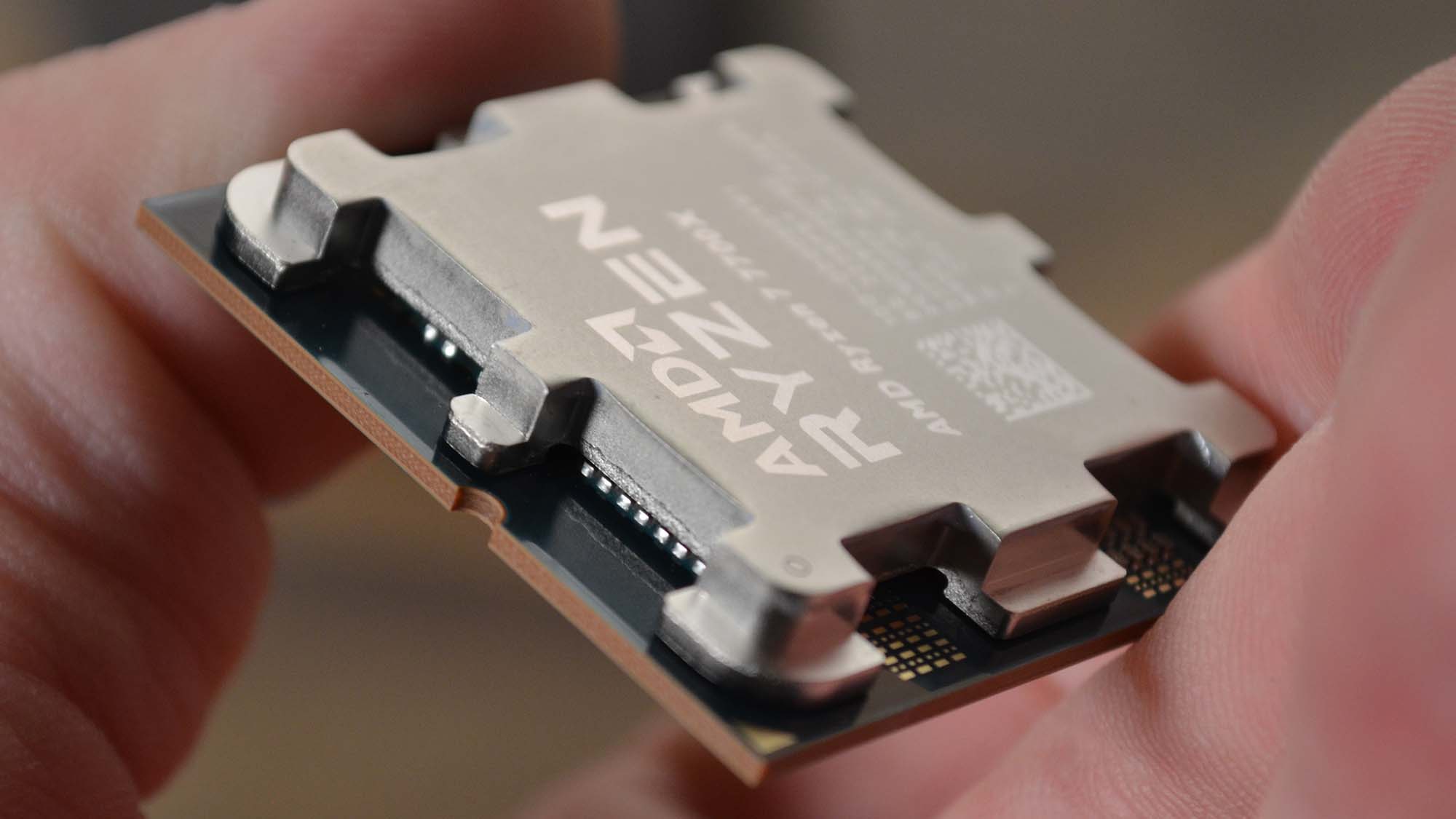

Of course, this wasn’t the big news for 2022 – that was the arrival of a new range of Ryzen 7000 processors based on Zen 4. This was an entirely new architecture, complete with a different CPU socket and therefore the requirement to buy a new motherboard (along with a shift to support DDR5 RAM, bringing the firm level with Intel on that score).

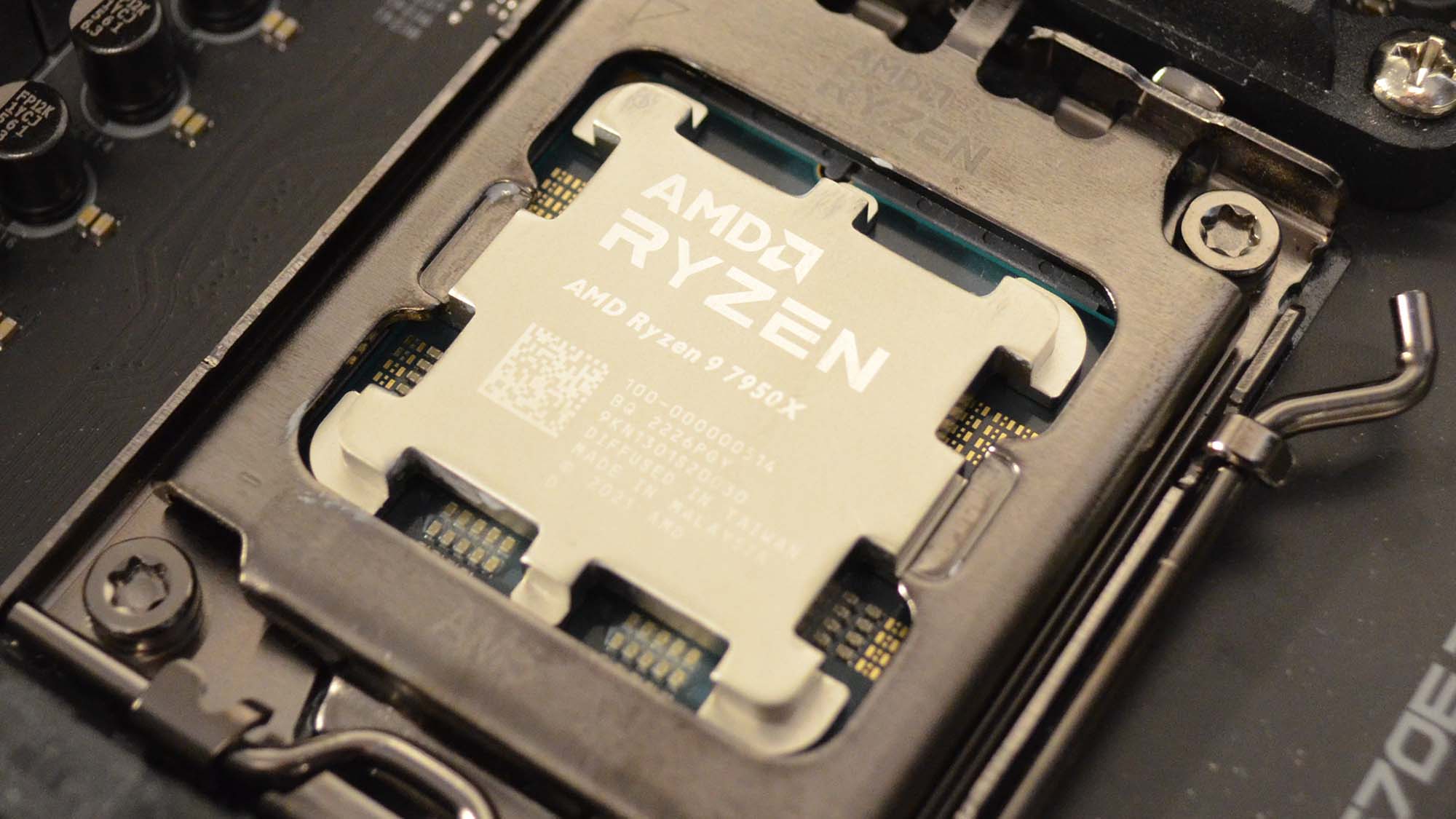

The initial Zen 4 chips were the Ryzen 5 7600X, Ryzen 7 7700X, and the high-end Ryzen 9 7900X and Ryzen 9 7950X, all of which hit the shelves in September. The flagship 7950X stuck with 16 cores, as with the previous generation, but that’s plenty enough to be honest, and as we observed in our review, this CPU represented a superb piece of silicon, and a huge leap in performance over the 5950X.

However, the Zen 4 top dog soon ran into a problem: Intel’s Raptor Lake flagship emerged just a month later, proving to have considerably more bite than the 7950X. In actual fact, performance-wise, there wasn’t much between the Core i9-13900K and Ryzen 9 7950X, except the Intel CPU was somewhat faster at gaming – the real problem for AMD was pricing, with Team Blue’s chip to be had for considerably less (a hundred bucks cheaper, in fact).

Compounding that, AMD’s new AM5 motherboards were more expensive than Intel’s LGA 1700 boards, and buyers on the AMD side had to purchase DDR5 RAM, as DDR4 wasn’t compatible – whereas with Raptor Lake, DDR4 was an option. And the latter memory is a fair bit cheaper, for really not all that much difference in performance (at least not in these early days for DDR5 – that will change in the future, of course).

All of this added up to a problem in terms of value proposition for Ryzen 7000 compared to Raptor Lake, not just at the flagship level, but with the mid-range Zen 4 chips, too. Indeed, the added motherboard and RAM costs made themselves even more keenly felt with a more modestly targeted PC build, so while the Ryzen 5 7600X and Intel’s Core i5-13600K were well-matched in performance terms (with a little give and take), the latter was a less expensive upgrade.

It soon became clear enough that when buyers were looking at Zen 4 versus Raptor Lake late in 2022, AMD’s product was falling behind in sales due to the aforementioned cost factors.

Hence Team Red made an unusual move, slashing the price of Ryzen 7000 CPUs during Black Friday, and keeping those discounts on as a ‘Holiday promotion’ afterwards. That kind of price-cutting is not normally something witnessed with freshly launched CPUs, and it was an obvious sign that AMD needed to stoke sales. Even as we write this, the 7600X still carries a heavy discount, meaning it’s around 20% cheaper than the 13600K – and clearly a better buy with that relative pricing.

AMD’s actions here will presumably have fired up sales considerably as 2022 rolled to an end (we’d certainly imagine, though we haven’t seen any figures yet). And while these price cuts aren’t permanent, more affordable motherboards should be available soon enough in 2023, taking the price pressure off elsewhere. Add into the mix that new X3D models are coming for Zen 4 which should be excellent for gaming – and rumor has it they should be revealed soon at CES, with multiple versions this time, not just one CPU – and AMD looks like it’s going to be upping the ante considerably.

In summary, while Zen 4 got off to a shaky start sales-wise, with Raptor Lake pulling ahead, AMD swiftly put in place plans to get back on track, so it could yet marshal an effective enough recovery to be more than competitive with Intel’s 13th-generation chips – particularly if those X3D chips turn up as promised. Mind you, Intel does still have cards up its own sleeve – namely some highly promising mid-range CPUs, and that 13900KS beast of a flagship refresh – so it’s still all to fight for in the desktop processor world, really.

RDNA 3 GPUs arrive to applause – but also controversy

Following a mid-year bump for RX 6000 models led by a refreshed flagship, the RX 6950 XT – alongside an RX 6750 XT and 6650 XT – the real consumer graphics showpiece for AMD in 2022 was when its next-gen GPUs landed. Team Red revealed new RDNA 3 graphics cards in November, and those GPUs hit the shelves in mid-December.

The initial models in the RDNA 3 family were both high-end offerings based on the Navi 31 GPU. The Radeon RX 7900 XTX and 7900 XT used an all-new chiplet design – new for AMD’s graphics cards, that is, but already employed in Ryzen CPUs – meaning the presence of not just one chip, but multiple dies (a graphics compute die or GCD, alongside multiple memory cache dies or MCDs).

We loved the flagship RX 7900 XTX, make no mistake, and the GPU scored full marks in our review, proving to be a more than able rival to the RTX 4080 – at a cheaper price point.

Granted, there were caveats with the 7900 XTX being slower than the RTX 4080 in some ways, most notably in ray tracing performance. AMD’s ray tracing chops were greatly improved with RDNA 3 compared to RDNA 2, but still remained way off the pace of Nvidia Lovelace graphics cards. Nvidia’s new GPUs also proved to offer much better performance with VR gaming, and creative apps to boot. But for gamers who weren’t fussed about ray tracing (or VR), the 7900 XTX clearly offered a great value proposition (partly because RTX 4080 pricing seemed so out of whack, mind you).

As for the RX 7900 XT, that GPU rather disappointed – like the RTX 4080, it was very much regarded as the lesser sibling in the shadow of its flagship. That didn’t mean it was a bad graphics card, just that being priced only slightly more affordably than the XTX variant – just a hundred bucks less – the XT didn’t work out nearly as well in terms of comparative performance.

The 7900 XTX suffered from its own problems, though, partly due to its popularity, as it quickly became established as the GPU to buy versus the RTX 4080, or indeed the 7900 XT (for the little bit of extra outlay, the XTX clearly outshone it). Stock vanished almost immediately, and as we write this, you still can’t find any, except via scalpers on eBay (predictably). Those auction prices are ridiculous, too, and indeed you could pretty much get yourself an RTX 4090, never mind 4080, for some of the eBay price tags floating around at the time of writing.

The stock situation wasn’t as bad for the RX 7900 XT, with models available as we write this, but still, many versions of this graphics card have sold out, and some of the ones hanging around are pricier third-party models (which obviously suffer further on the value proposition front).

Part of the reason for stock being initially sparse was that some card makers didn’t have all their models ready to roll in mid-December – indeed, MSI didn’t launch any RDNA 3 cards at all (and may not have custom third-party boards on shelves until Q1 2023, or so the rumor mill theorized).

Aside from availability – which is, let’s face it, hardly an unexpected issue right out of the gate with the launch of a new GPU range – there was another wobble around RDNA 3 that AMD had to come out and defend itself against in the first week of sales.

This was the speculation that RX 7000 GPUs had been pushed out with hardware functionality that didn’t work, and were ‘unfinished’ silicon – unfair criticisms, in our opinion, and AMD’s too, with Team Red quickly debunking those theories.

What did still raise question marks elsewhere, however, was some odd clock speed behavior, and more broadly, the idea that the graphics driver paired with the RDNA 3 launch wasn’t up to scratch. In this case, the rumor mill floated more realistic-sounding theories: that AMD felt under pressure to get RX 7000 GPUs out in its long-maintained timeframe of the end of 2022, only just squeaking inside that target, in order to make sure Nvidia didn’t enjoy the Holiday sales period uncontested (and to keep investors happy).

The same rumor-mongers noted, however, that AMD is likely to be able to improve drivers in order to extract 10% better performance, or maybe more, with headroom for quick boosts on the software front that really should have been in the release driver.

All of that is speculation, but it’ll be good news for 7900 XTX and XT buyers if true – because their GPUs will quickly get better, and make the RTX 4080 look an even shakier card. Indeed, we may even see the 7900 XTX become a much keener rival for the 4090, rather than the repositioning AMD engaged in at a late pre-launch stage, pitting the GPU as an RTX 4080 rival. (Which in itself, perhaps, suggests that Team Red expected driver work to be further forward than it actually ended up being for launch – and that these gains will be coming soon enough.)

Even putting that speculation aside, and forgetting about potentially swift driver boosts, AMD’s RDNA 3 launch still went pretty well on the whole, with the flagship 7900 XTX coming off really competitively against Nvidia – performing with enough grunt to snag the top spot in our ranking of the best GPUs around. Even if the 7900 XT did not hit those same heights...

And granted, the controversies around RDNA 3 and hardware oddities didn’t help, but these felt largely overblown to us, and the real problem was that ever-present bugbear of not enough stock volume with the initial launch. Hopefully the supply-demand imbalance will be corrected soon enough (maybe even by the time you read this).

The GPU price is right (or at least far more reasonable)

One of the big positives of 2022 was the supply of graphics cards correcting itself, and the dissipation of the GPU stock nightmare that had haunted gamers for so long.

Early in the year, prices started to drop from excruciatingly high levels, and by mid-2022, they were starting to normalize. The good news for consumers was that AMD graphics cards – RX 6000 models – fell more quickly in price than Nvidia GPUs, and towards the end of the year, there were some real bargains (on Black Friday in particular) to be had for those buying a Radeon graphics card.

Of course, a lot of this was down to the proximity of the next-gen GPU reveals, and the need to get rid of current-gen stock as a result. But there’s no arguing about the fact that it was a blessed relief at the lower end of the market for folks to be able to pick up an RX 6600 or 6650 XT for not much more than $200 in the US for the former (and as little as $250 for the latter at some points).

It was a good job these price drops happened, though, because like Nvidia’s, AMD’s GPU launches in 2022 did little to help the lower end of the market. AMD released the aforementioned RX 6650 XT in May, but the graphics card was woefully overpriced in the mid-range initially (at $400, which represented terrible value). Offerings like the RX 6500 XT and RX 6400 further failed to impress, with the former being very pricey for what it was, and while the latter did carry a truly wallet-friendly price tag, it was a shaky proposition performance-wise.

These products were generally poorly received, so let’s hope next year brings something better at the low end from RDNA 3, eventually. Otherwise both AMD and Nvidia are really leaving the door open for Intel to carve a niche in the budget arena (with Team Blue’s Arc graphics drivers undergoing solid improvements as time rolls on).

FSR 2.0: AMD switches to temporal

Not to be beaten by Nvidia DLSS, AMD pushed out a new version of its frame rate boosting FSR (FidelityFX Super Resolution) in May 2022. FSR 2.0 brought temporal upscaling into the mix – which is what DLSS uses, albeit AMD doesn’t augment it with AI unlike Nvidia’s tech – and the results were a huge improvement on the first incarnation of FSR (which employed spatial upscaling).

It was an important step for AMD to take in terms of attempting to keep level with Nvidia in the graphics whistles-and-bells department (even if Team Red couldn’t do that with ray tracing, as already noted, with its new RDNA 3 GPUs).

Support is key with these technologies, of course, and in December, AMD reached a milestone with 101 games now supporting FSR 2.0, with that happening in just over half a year. DLSS 2.0 may support more games, at 250 going by Nvidia’s latest figure, but that includes some apps, and has only risen by 50 in the past six months (it was 200 back in July).

So, AMD’s overall rate of adoption looks to be twice as fast, or thereabouts, backing up promises Team Red made about FSR 2.0 being easier to quickly add to games – which bodes well for future acceleration of those numbers of supported titles.
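As a rough check on that "twice as fast" claim, the figures quoted above can be turned into titles added per month. This is only a back-of-the-envelope sketch: the function name is ours, and because "just over half a year" isn't an exact figure, we've assumed a window of between six and seven months for FSR 2.0.

```python
def titles_per_month(titles_added: int, months: float) -> float:
    """Average number of newly supported titles per month."""
    if months <= 0:
        raise ValueError("months must be positive")
    return titles_added / months


# Figures quoted in the article.
FSR2_TITLES = 101              # FSR 2.0 titles as of December 2022
DLSS_TITLES_ADDED = 250 - 200  # DLSS growth over the past six months
DLSS_MONTHS = 6.0

dlss_rate = titles_per_month(DLSS_TITLES_ADDED, DLSS_MONTHS)

# Assumption: "just over half a year" lies somewhere between 6 and 7 months.
for fsr_months in (6.0, 7.0):
    fsr_rate = titles_per_month(FSR2_TITLES, fsr_months)
    ratio = fsr_rate / dlss_rate
    print(f"FSR 2.0 over {fsr_months:.0f} months: "
          f"{fsr_rate:.1f} titles/month, {ratio:.2f}x the DLSS rate")
```

Depending on which end of that assumed window you pick, FSR 2.0 comes out at roughly 1.7 to 2 times the DLSS rate, so "twice as fast, or thereabouts" holds up as a ballpark figure.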

Of course, Nvidia just unleashed DLSS 3.0 – as an RTX 4000 GPU exclusive, mind, and with vanishingly few titles right now – and so AMD revealed it’s working on FSR 3.0, too. Team Red told us that it uses tech similar to DLSS 3.0 and Nvidia’s Frame Generation, and could double frame rates (best-case scenario).

But with any details on FSR 3.0 seriously thin on the ground, it felt like a rushed announcement and rather a case of keeping up with the Joneses (or the Jensen, perhaps). Which doesn’t mean it won’t be great (or that it will), but with a vague ‘coming in 2023’ release date, we’re not likely to find out soon, and FSR 3.0 is probably still a long way off.

Threadripper goes All-Pro

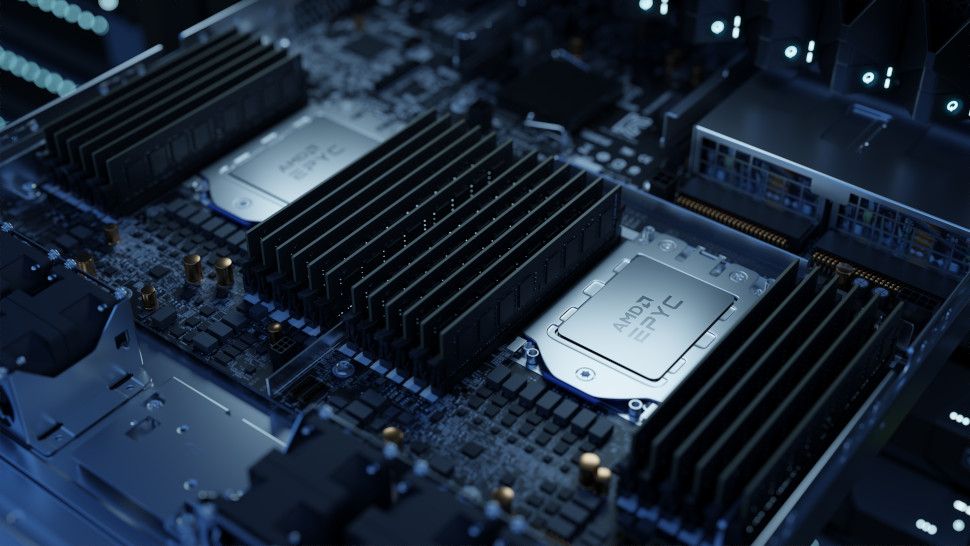

AMD deployed a superheavyweight 64-core CPU this year aimed at professional users. The Ryzen Threadripper Pro 5995WX (backed by the 32-core 5975WX, and 24-core 5965WX) faced no opposition from Intel, though, which hasn’t released a high-end desktop (HEDT) range in years (but that should change in 2023, mind you, if rumors are right).

Meanwhile, the 5995WX strutted as an unchallenged champion (and will have a successor next year, as well), but the bad news on the AMD front is that there were no non-Pro Threadripper models – and won’t be in the future either.

As these Pro-categorized chips are ridiculously expensive, that puts Threadripper out of the pricing reach of all but the richest of PC enthusiasts. That said, as December rolled to a close, a rumor was floated suggesting maybe AMD will bring out non-Pro chips with the next generation of Threadripper (but take that with very heavy seasoning).

Server success

AMD forged ahead in the server market during 2022, with its Q3 earnings report showing that the firm’s data center business boomed to the tune of 45% compared to the previous year. AMD moved up to hold 17.5% of the server market, grabbing more territory from Intel, and experiencing its fourteenth consecutive quarter of growth in this area, no less.

AMD has new fourth-generation EPYC chips to come in 2023, including Genoa-X processors utilizing 3D V-Cache tech to offer in excess of 1GB of L3 cache per socket (a larger amount than any current x86 chip). It’s no wonder Intel has forecast a difficult time for itself in the server sphere in the near future.

Concluding thoughts

AMD had a pretty good year with both its new CPU and GPU ranges, but not without problems – a lot of which were to do with the heat of the competition. While Ryzen 7000 provided a very impressive generational boost, the Zen 4 chips struggled on the cost front against Intel Raptor Lake (and the relative affordability of Team Blue’s platform in particular).

AMD wasn’t afraid to take action, though, in terms of some eye-opening early doors price cuts for Ryzen 7000 processors. And in the (likely very near) future, fresh X3D models based on Zen 4 hold a whole heap of promise – not to mention cheaper AM5 motherboards to make upgrading to AMD’s new platform a more affordable affair.

As for graphics cards, the Radeon RX 7900 XTX made quite a splash, and on the whole, RDNA 3 kicked off well enough – even if the launch felt rushed, with question marks around the drivers perhaps needing work, and the 7900 XT failed to be quite such a hit as the XTX.

There’s no doubting that Nvidia clearly held the performance crown in 2022, however, with the RTX 4090 well ahead of the 7900 XTX in most respects. (Driver improvements for RDNA 3 could be key in playing catch-up for AMD, though, as we discussed above.)

All that said, arguments about the relative merits of these high-end graphics cards from both Nvidia Lovelace and AMD RX 7000 are not nearly as important as what comes in the mid-range. That’s going to be the real GPU battleground – outside of these initial top-end boards that cost more than most people pay for an entire PC – and we’re really excited to see how these ranges stack up against each other.

And we’re praying that the budget end of the GPU spectrum will actually get some peppier offerings from AMD (and Nvidia), otherwise Intel might well take advantage with low-cost Arc cards next year.

from TechRadar: computing components news https://ift.tt/x6aJt8v
