We’re almost done with 2023, and as ever at TechRadar, it’s time to look back at how the various tech giants performed over the past year. In AMD’s case, we saw some inspiring new products introduced for its consumer processor and GPU ranges, and renewed gusto in its pursuit of AI.

There were also shakier times for Team Red, though, notably a string of blunders – the vapor chamber cooling debacle is one that springs immediately to mind, but there were other incidents, and a few too many of them. Join us as we explore the ups and downs of AMD’s 2023, weighing everything up at the end.

Zen 4 gets 3D V-Cache

One of AMD’s big moves this year was the introduction of 3D V-Cache for its Ryzen 7000 desktop processors.

AMD introduced a trio of X3D models, with the higher-end Ryzen 9 7950X3D and 7900X3D hitting the shelves first in February. These were good CPUs and we liked them, particularly the Ryzen 9 7950X3D, which is a sterling processor, albeit a very pricey one (similarly, we felt the price of the 7900X3D held it back somewhat).

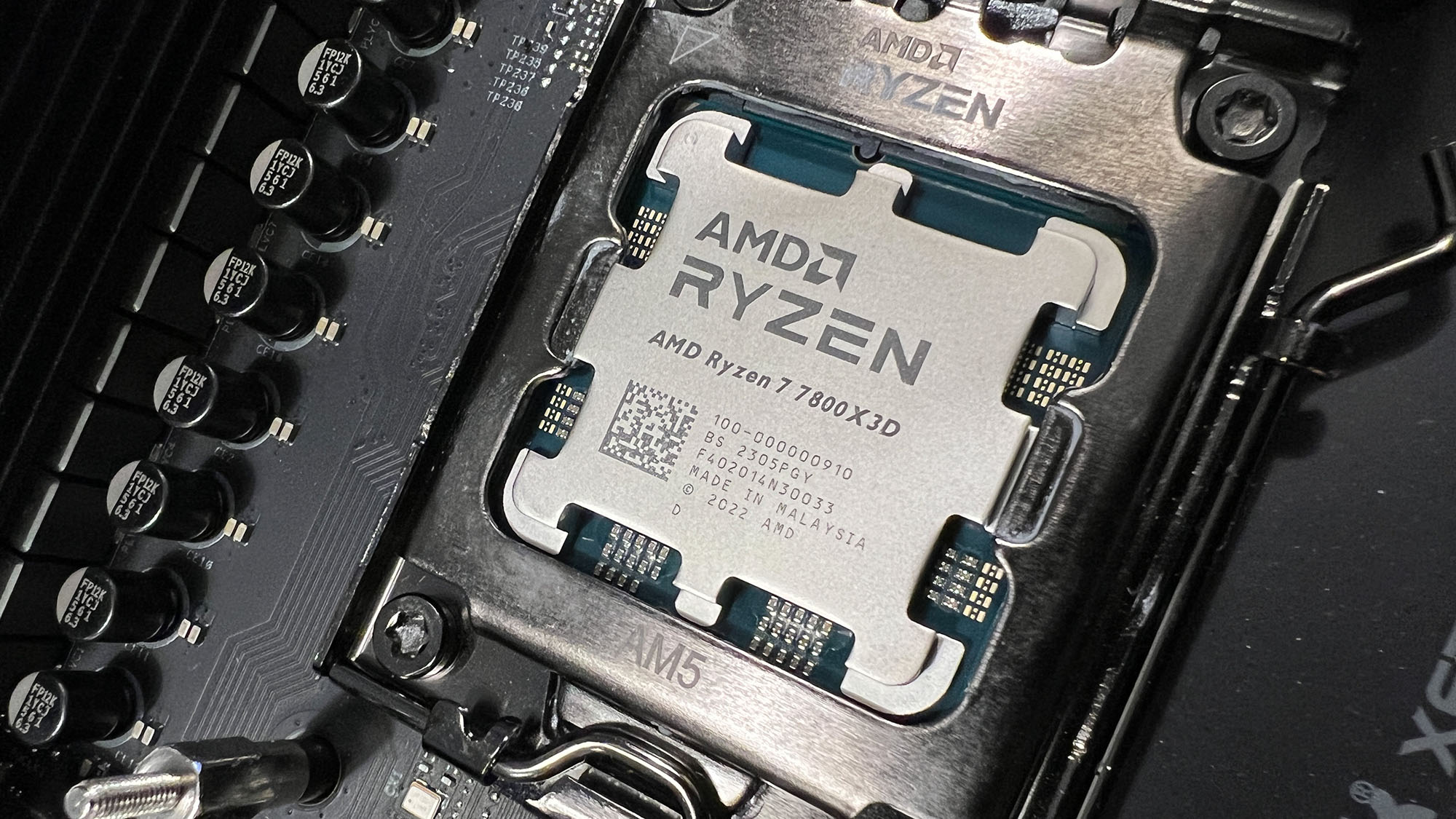

What everyone was really waiting for, though, was the more affordable mid-range 3D V-Cache chip, and the Ryzen 7 7800X3D turned up in April. We praised the 7800X3D’s outstanding gaming performance, and as we concluded in our roundup of the best processors, it’s the best choice for a gaming PC. This was a definite highlight among AMD’s releases this year.

We were also treated to an interesting diversion in the form of a new last-gen X3D processor, for which AMD adopted a very different tactic. The Ryzen 5 5600X3D arrived in July as a cheap CPU that’s great for an affordable gaming PC, the catch being that it only went on sale through Micro Center stores in the US. For those who couldn’t get one, though, there was always the older Ryzen 7 5800X3D, which dipped to some really low prices at various points throughout the year. For gamers, AMD certainly had some tempting pricing.

Away from the world of 3D V-Cache, AMD also pushed out a few vanilla Ryzen 7000 CPUs right at the start of the year, namely the Ryzen 9 7900, Ryzen 7 7700, and Ryzen 5 7600, the siblings of the already released ‘X’ versions of these processors. They were useful additions to the mix, making the Zen 4 range a bit more affordable.

RDNA 3 arrives for real

AMD unleashed its RDNA 3 graphics cards right at the close of 2022, but only the top-tier models, the Radeon RX 7900 series. The RX 7900 XTX and 7900 XT were all we had until surprisingly far into 2023 – it wasn’t until May that the RX 7600 pitched up at the other end of the GPU spectrum.

The RX 7600 very much did its job as a wallet-friendly graphics card, mind you, and this GPU seriously impressed us with its outstanding performance at 1080p and excellent value proposition overall. Indeed, the RX 7600 claimed the title of our best cheap graphics card for this year, quite an achievement, beating out Nvidia’s RTX 4060.

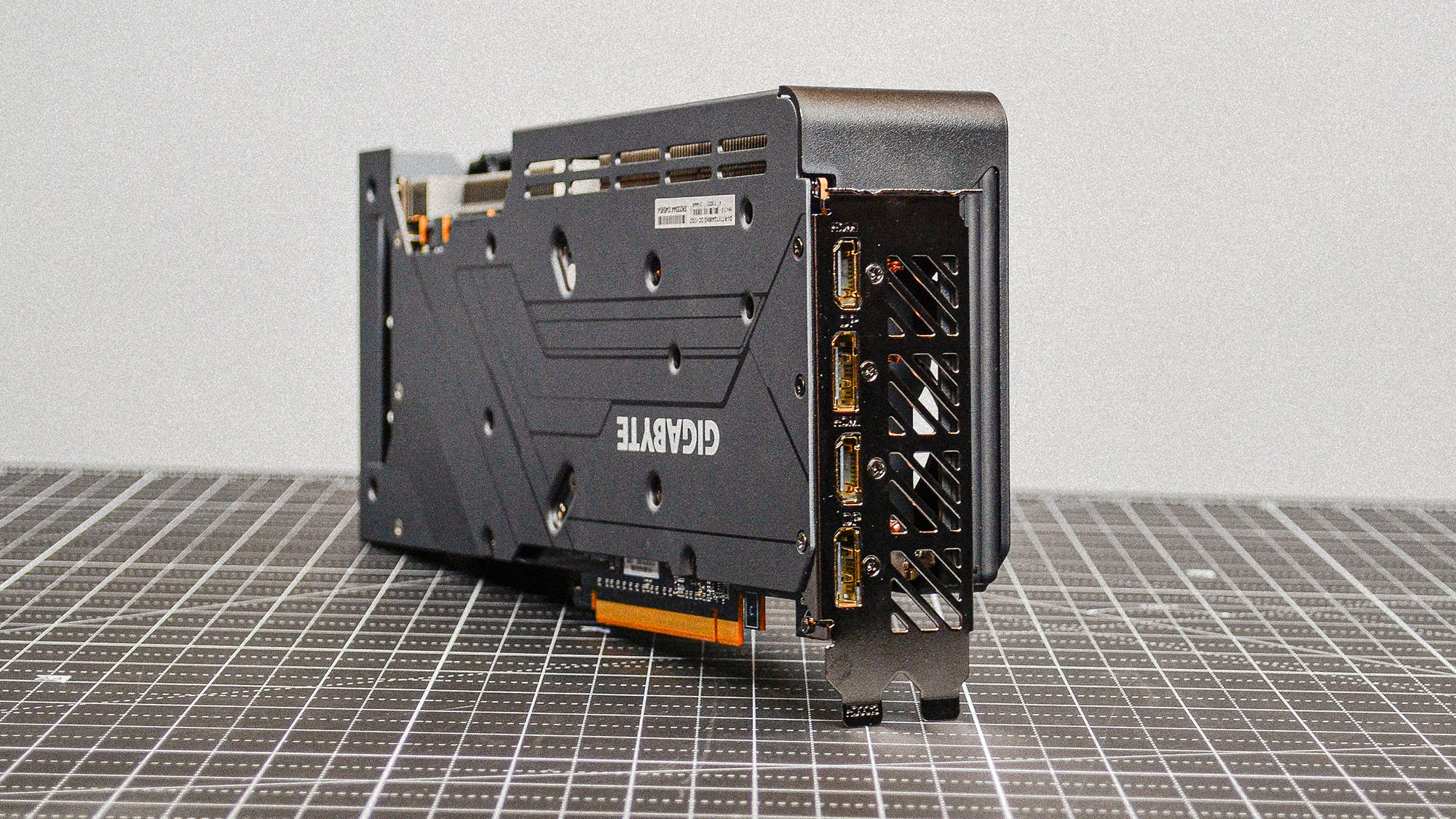

Then we had another sizeable pause, during which gamers grew rather impatient, before the gap, or rather gulf, between the RX 7600 and RX 7900 models was filled. Enter stage left the RX 7800 XT and the 7700 XT as mid-range contenders in September, one of which really punched above its weight.

That was the RX 7800 XT, and even though it only marginally outdid its predecessor for pure performance, this new RDNA 3 mid-ranger did so well in terms of its price/performance ratio versus its RTX 4070 rival that it scooped the coveted top spot in our best graphics card roundup, deposing the RTX 4070, which had held the number one position since its release six months prior.

As for the RX 7700 XT, it was rather overshadowed by its bigger mid-range sibling, as it didn’t make as much sense value-wise as the 7800 XT.

Still, the long and short of it is that AMD bagged both the title of best GPU for this year and that of best budget offering – not too shabby indeed.

From what we saw of sales reports – anecdotally and via the rumor mill – these new desktop graphics cards pepped up AMD’s sales a good deal. While the RX 7900 series GPUs were struggling against Nvidia early in 2023, towards the end of the year, the 7800 XT in particular was really shifting a lot of units (more than the RTX 4070).

While Nvidia is still the dominant desktop GPU power by far, it’s a sure bet AMD regained some turf with these popular RDNA 3 launches in 2023.

FSR 3 finally turns up

As already observed, we did a fair bit of waiting for AMD this year, and FSR 3 was another item where patience was definitely required.

FSR is, of course, AMD’s rival to DLSS for boosting frame rates in games, and more specifically, FSR 3 was Team Red’s response to DLSS 3, which uses frame generation technology (inserting extra, generated frames between the ones the game renders to artificially boost the frame rate).

FSR 3 was actually announced in November 2022 – as we covered in our roundup of AMD’s highlights for last year – and we predicted back then that it wouldn’t turn up for ages.

Indeed, it didn’t: for most of 2023 we heard nothing about FSR 3, save for a small info drop for game developers in March. Then finally, at the end of September, AMD officially released FSR 3.

However, the release didn’t suddenly put AMD level-pegging with Nvidia. For starters, Nvidia went ahead and pushed out DLSS 3.5 (featuring ray reconstruction), and frankly, AMD’s frame generation feature was quite some way behind Team Green’s in its initial incarnation. Nor was it nearly as widely supported, and it still isn’t: adoption has moved at a sluggish pace, with only four games making use of FSR 3 so far.

But at least it’s here, and AMD made another important move in December, as the year drew to a close, by releasing an improved version of FSR 3. With Avatar: Frontiers of Pandora, the third game to introduce support, the new version (FSR 3.0.3) runs a good deal more smoothly, at least according to some reports.

On top of this, AMD also made FSR 3 open source. That means more games should be supported soon enough. Modders can also add FSR 3 to titles themselves, and some have already started doing so, but unofficial support will never be quite the same as the developer implementing the tech.

Furthermore, on the game support front, Team Red made another move at the same time as FSR 3: AMD Fluid Motion Frames (AFMF), a frame generation tech that, as well as being part of FSR 3, is integrated separately at a driver level.

This allows for frame generation boosts to be applied to all games – via the driver, with no need for the game to be coded to support it – with the caveat being that it only works with RX 7000 and 6000 GPUs. Now that’s great, but note that what you’re getting here is a ‘lite’ version of the frame generation process applied in FSR.

As 2023 now comes to a close, AFMF is still in preview (testing) and somewhat wonky, though Team Red has improved the tech a fair bit since launch, much like FSR 3.

In short, it looks like AMD is getting there, and it’s also ushering in innovations such as Anti-Lag+ (for reducing input latency, on RX 7000 GPUs in supported games only, although this has had its own issues). Not to mention the company is wrapping up all this tech in HYPR-RX, an easy-to-use one-click tuning mode that applies the relevant (supported) features to make a given game perform optimally (hopefully).

But there’s still that inevitable feeling of following in Nvidia’s wake when it comes to FSR and related features, with AMD rather struggling to keep up with the good ship Jensen.

Still, AMD appears to have an overarching vision and is making solid, if somewhat slow, progress towards it. But we certainly need to see more games that (officially) support FSR 3, with an implementation impressive enough to equal DLSS 3 (or get close to it).

Portable goodness

This year saw some interesting launches from AMD on the portable device front, not the least of which was the Ryzen Z1 APU. This Zen 4-based mobile processor emerged in April as the engine of several gaming handhelds, notably the Asus ROG Ally and Lenovo Legion Go.

There were two versions of the Z1: the 6-core vanilla chip, and the Z1 Extreme, an 8-core CPU that, crucially, has a lot more graphics grunt (12 RDNA 3 CUs, rather than just 4 CUs for the baseline processor). The Z1 Extreme proved to be an immense boon to these Windows-powered gaming handhelds, helping make the Legion Go what we called the true Steam Deck rival in our review.

The weakness of these Windows-toting Steam Deck rivals is, of course, battery life (particularly when running demanding games at more taxing settings). AMD was on hand to help here, too, introducing HYPR-RX Eco profiles to its graphics driver late in the year, which should offer a convenient way to tap into considerable power savings (without too much of a performance trade-off, we hope).

Away from handhelds, in December AMD also unveiled a range of Ryzen 8000 CPUs for laptops. These ‘Hawk Point’ chips aren’t out yet and will debut in notebooks in early 2024, although note that they’re Zen 4-based (the same as Ryzen 7000 silicon).

The line-up is led by the flagship Ryzen 9 8945HS, an 8-core processor with integrated graphics (Radeon 780M) that’ll be great for 1080p gaming (with some details toned down, mind). These chips will also benefit from AMD’s XDNA NPU (Neural Processing Unit) for accelerating AI tasks, and Team Red asserted that Hawk Point chips will be 1.4x faster than the Ryzen 7040 series in generative AI workloads – a pretty tasty upgrade.

AI bandwagon

Those Hawk Point mobile CPUs showed AMD’s growing focus on AI, and this was a broader push for Team Red throughout the year, which comes as no surprise – everyone who was anyone in tech, after all, was investing in artificial intelligence. Moreover, Nvidia made an absolute fortune in the AI space this year, and obviously that didn’t go unnoticed at AMD towers.

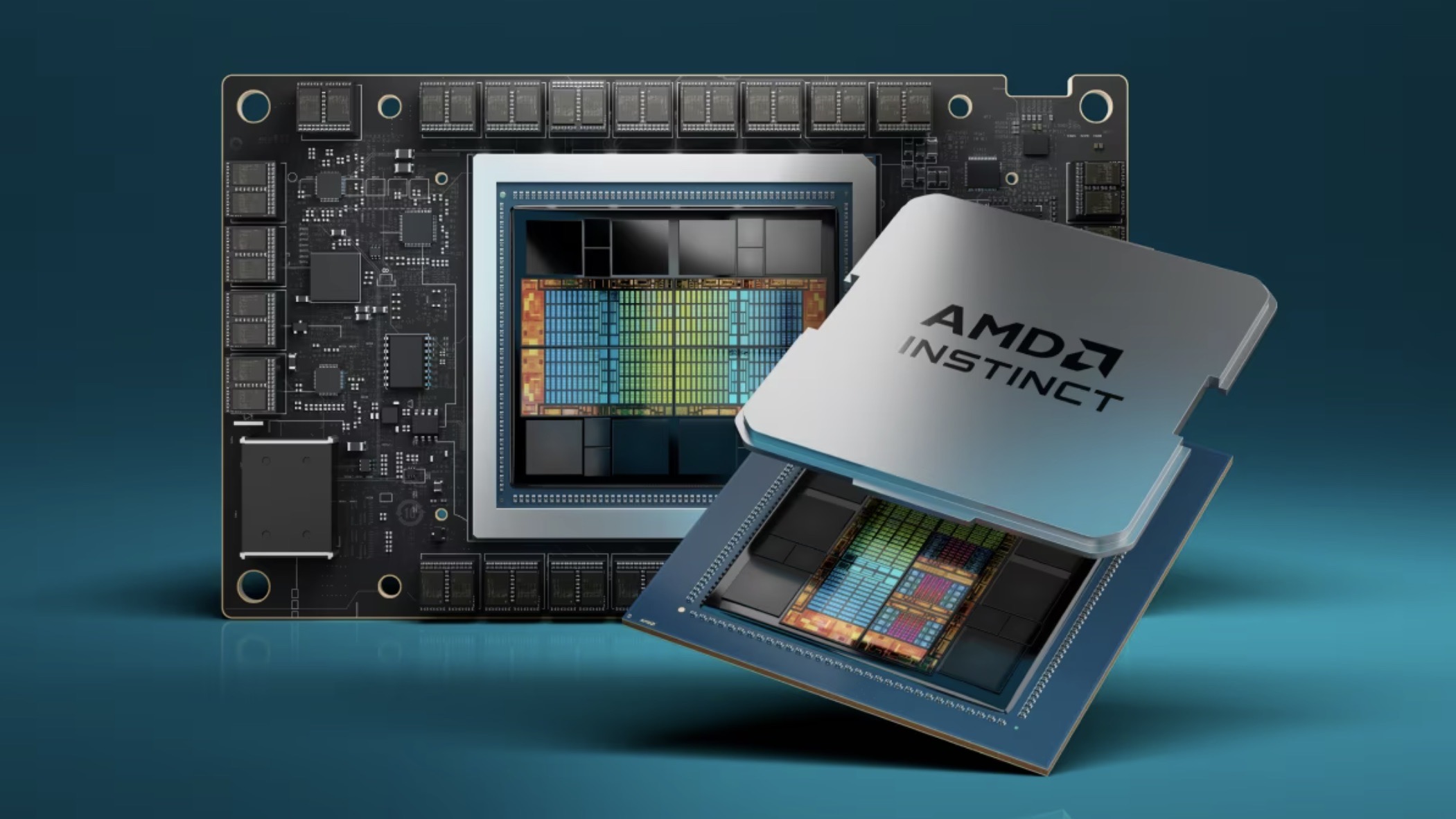

As well as incorporating heftier NPUs in its processors, in May AMD tapped Microsoft for resources and cash to help develop AI chips (for the gain of both companies). But the real power move for Team Red came in December, when AMD revealed a Zen 4 APU for AI applications (the largest chip it has ever made, in fact, bristling with 153 billion transistors).

The Instinct MI300A is loaded with 24 CPU cores plus a GPU with 228 CDNA 3 CUs and eight stacks of HBM3 memory, posing a genuine threat to Nvidia’s AI dominance. AMD’s testing indicates that the MI300A is about on par with Nvidia’s mighty H100 for AI performance, and as the year ended, we heard that firms like Microsoft and Meta are interested in adopting the tech.

AMD said that the Instinct MI300A will be priced competitively to poach customers from Nvidia, as you might expect, while acknowledging that Team Green will of course remain dominant in this space in the near future. However, Lisa Su intends for her firm to take a “nice piece” of a huge AI market going forward, and if the MI300A is anything to go by, we don’t doubt it.

Year of the gremlins

While AMD had plenty of success stories in 2023, as we’ve seen, there were also lots of things that went wrong. Little things, medium-sized things, and great hulking gremlins crawling around in the works and making life difficult – or even miserable – for the owners of some AMD products who got unlucky.

Indeed, AMD was dogged by lots of issues early in the year, most notably a serious misstep with the vapor chamber cooling for its RX 7900 XTX graphics cards. Although the flaw only affected a small percentage of reference boards, it’s absolutely one of the biggest GPU blunders we can recall in recent years (Nvidia’s melting cables with the RTX 4090 being another obvious one).

We also witnessed a worrying flaw with AMD’s Ryzen 7000 CPUs randomly burning out in certain overclocking scenarios. Ouch, in a word.

Other examples of AMD’s woes this year include a graphics driver update in March bricking Windows installations (admittedly in rare cases, but this is still a nasty thing to happen off the back of a simple Adrenalin driver update), along with other driver bugs that caused freezing or crashing. AMD chips were also hit by security flaws of one kind or another, some more worrying than others.

Not to mention RX 7000 graphics cards consuming far too much power when idling in some PC setups (with multiple monitors or high refresh rate screens), a problem that wasn’t resolved until near the end of the year.

There were other hitches besides, but you get the idea – in terms of gaffes and failures of various kinds, 2023 was a less than ideal year for AMD.

Concluding thoughts

Clearly, AMD tried the patience of gamers in some respects this year. First, there were those glaring assorted blunders, which doubtless proved a source of frustration for some owners of its products. Second, AMD made gamers wait an excessively long time for features like FSR 3, and ditto for filling out the rest of the RDNA 3 range, as those graphics cards took quite some time to arrive.

However, those graphics cards were very much worth the wait. The one-two punch on the GPU front was a real coup for AMD: it released the top budget graphics card in the RX 7600, and our favorite GPU of them all, the RX 7800 XT, which reigns atop our ranking of the top boards available right now.

There were plenty of other highlights, such as releasing the best gaming CPU ever made – in the form of the Ryzen 7 7800X3D – which was a pretty sharp move this year. We also received a top-notch mobile APU for handhelds in the Ryzen Z1 Extreme.

AMD’s GPU sales were appropriately stoked as 2023 rolled on, and FSR, plus other related game-boosting tech, finally seems to be coming together, albeit at an overly slow, if steady, pace. In the field of AI, Team Red is suitably ramping up the AI capabilities of its CPUs, and with the Instinct MI300A accelerator it’s providing a meaningful challenge to Nvidia’s dominance.

In short, despite some worrying wobbles, 2023 was a good year for AMD. The future looks pretty rosy, too, with next-gen Zen 5 processors that look set to get the drop on Intel’s Arrow Lake silicon next year, and some even more tantalizing Zen 5 laptop chips (‘Strix Point’, sitting above Hawk Point and sporting XDNA 2 and RDNA 3.5) inbound for 2024.

Next-gen Radeon GPUs are a little sketchier. RDNA 4 is coming next year, but the range may top out at mid-tier products, as AMD refocuses on AI graphics cards (as expected, given that’s where the profits are). Those RDNA 4 cards could still pack a value punch, though, and looking at the current mid-range champ, the RX 7800 XT, we’d be shocked if they didn’t.

from TechRadar: computing components news https://ift.tt/wazVhKe
