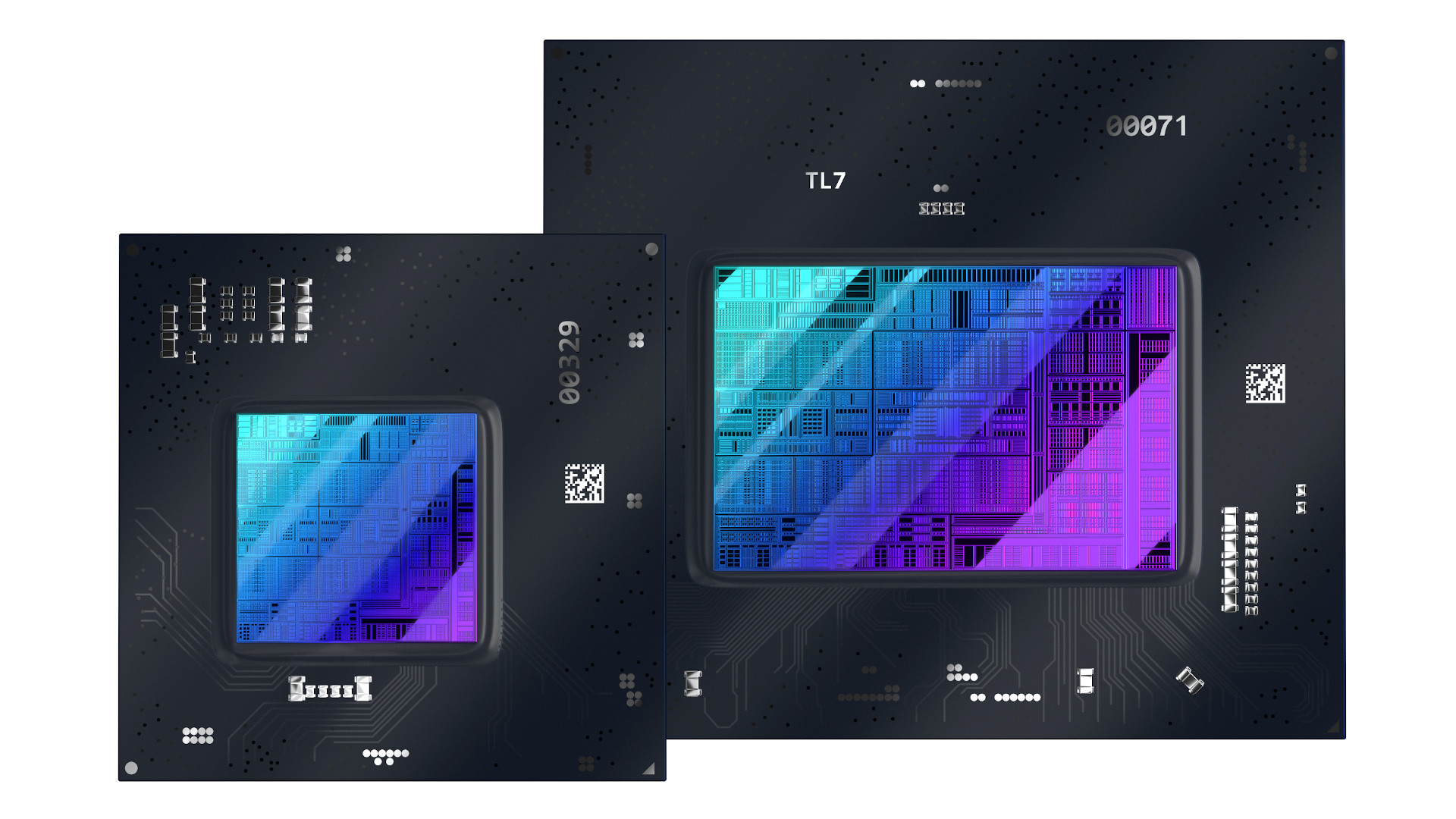

Intel has revealed the first of its Arc Alchemist graphics cards, and as we already knew, these initial offerings are laptop-class GPUs.

Intel announced three classes of GPUs: lower-end Arc 3 mobile GPUs, mid-range Arc 5 GPUs, and Arc 7 GPUs for high-performance gaming laptops. This means Intel will finally be able to power legitimate gaming products, putting it head to head with gaming laptops powered by Nvidia and AMD.

Intel broadly claims that Arc 3 offers up to double the performance of Intel Iris Xe integrated graphics, and the benchmarks bear this out in some cases, with generally sizeable gains across the board. In the rarer worst-case scenarios, such as Rocket League, the A370M looks to be only around 20% faster, which is still a noticeable boost.

However, Intel didn't talk about how performance would look against competing systems powered by Nvidia GeForce or AMD Radeon graphics, so it'll be interesting to see which laptop chips land on top.

Luckily, we won't have to wait long – Intel Arc 3 GPUs will be available in gaming laptops starting March 30, and the Arc 5 and Arc 7 graphics will start appearing later in 2022.

What about specs?

The Arc 3 GPUs are the A350M and A370M, entry-level products with 6 and 8 Xe-cores respectively. Both have 4GB of GDDR6 VRAM on board with a 64-bit memory bus, with the A350M sporting a clock speed of 1150MHz and the A370M upping that considerably to 1550MHz. Power consumption is 25W to 35W for the A350M, and 35W to 50W for the A370M.

As for the mid-range Arc 5, you get the A550M which runs with 16 Xe-cores clocked at 900MHz, doubling up the VRAM to 8GB (and widening the memory bus to 128-bit). Power will sit at between 60W and 80W for this GPU.

Finally, the high-end chips are the A730M and A770M which bristle with 24 and 32 Xe-cores respectively. The lesser A730M is clocked at 1100MHz and has 12GB of GDDR6 VRAM with a 192-bit bus, and power usage of 80W to 120W.

Intel has clocked the mobile flagship A770M at 1650MHz and this GPU has 16GB of video RAM with a 256-bit bus. Power consumption is 120W to 150W maximum.
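For readers who want the line-up side by side, the figures quoted above can be collected into a small Python sketch. This is only an illustrative summary: the `ArcMobileGpu` class, its field names and the `format_table` helper are hypothetical names chosen here, not anything Intel publishes, and the numbers are simply those given in this article.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ArcMobileGpu:
    """One mobile GPU as described in the article (hypothetical structure)."""
    name: str
    tier: str
    xe_cores: int
    clock_mhz: int
    vram_gb: int
    bus_bits: int
    power_min_w: int
    power_max_w: int


# Figures copied from the article text; nothing here is measured or inferred.
ARC_MOBILE: List[ArcMobileGpu] = [
    ArcMobileGpu("A350M", "Arc 3", 6, 1150, 4, 64, 25, 35),
    ArcMobileGpu("A370M", "Arc 3", 8, 1550, 4, 64, 35, 50),
    ArcMobileGpu("A550M", "Arc 5", 16, 900, 8, 128, 60, 80),
    ArcMobileGpu("A730M", "Arc 7", 24, 1100, 12, 192, 80, 120),
    ArcMobileGpu("A770M", "Arc 7", 32, 1650, 16, 256, 120, 150),
]


def format_table(gpus: List[ArcMobileGpu]) -> str:
    """Return a plain-text table with one header row and one row per GPU."""
    header = (
        f"{'GPU':<6} {'Tier':<6} {'Xe-cores':>8} {'Clock':>8} "
        f"{'VRAM':>5} {'Bus':>8} {'Power':>10}"
    )
    rows = [header]
    for g in gpus:
        clock = f"{g.clock_mhz}MHz"
        vram = f"{g.vram_gb}GB"
        bus = f"{g.bus_bits}-bit"
        power = f"{g.power_min_w}-{g.power_max_w}W"
        rows.append(
            f"{g.name:<6} {g.tier:<6} {g.xe_cores:>8} {clock:>8} "
            f"{vram:>5} {bus:>8} {power:>10}"
        )
    return "\n".join(rows)


if __name__ == "__main__":
    print(format_table(ARC_MOBILE))
```

Laid out this way, the A550M's 900MHz clock stands out as the lowest in the range, even though it has twice the Xe-cores of the A370M.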

Never mind the raw specs, you may well be saying at this point: what about actual performance? Well, Intel does provide some internal benchmarking, but only for the Arc 3 GPUs.

The A370M is pitched as providing ‘competitive frame rates’ for gaming at 1080p resolution, exceeding 90 frames per second (fps) in Fortnite (where the GPU hits 94 fps at medium details), GTA V (105 fps, medium details), Rocket League (105 fps, high details) and Valorant (115 fps, high details).

Intel provides further game benchmarks showing over 60 fps performance in Hitman 3 (62 fps, medium details), Doom Eternal (63 fps, high details), Destiny 2 (66 fps, medium details) and Wolfenstein: Youngblood (78 fps, medium details).

All of those benchmarks are taken with the A370M running in conjunction with an Intel Core i7-12700H processor, and comparisons are provided to Intel’s Iris Xe integrated GPU in a Core i7-1280P CPU.

Analysis: We can’t wait to see the rest of Intel’s alchemy

The first laptops with Arc 3 GPUs are supposedly available now – we’d previously heard from Intel that they’d be out on launch day, or the day after – and the one Intel highlights is the Samsung Galaxy Book2 Pro.

Hopefully, a good number of models featuring Arc 3 graphics will be out there soon enough – from all major laptop makers, as you’d expect. The GPU will happily slot into ultra-thins like the Galaxy Book2 Pro, providing what looks like pretty solid 1080p gaming performance (running the likes of Doom Eternal on high details in excess of 60 fps). The pricing of these laptops is set to start from $899 (around £680, AU$1,200), Intel notes.

It’s a shame we didn’t get any indication of how the mid-range Arc 5 – which is something of an oddity with its base clock dipping right down to 900MHz – and high-end Arc 7 products will perform, but then they don’t launch for a few months yet. What Intel can pull off here will tell us much more about how Arc will pan out in this first generation, and how the much-awaited desktop graphics cards – also expected to land in Q2 – will challenge AMD and Nvidia in gaming PCs.

Also of note: during this launch, Intel let us know that XeSS, its AI super sampling tech (to rival Nvidia DLSS and AMD FSR), won’t debut with these first mobile GPUs, but will instead arrive in the summer with the big-gun Arc GPUs. Over 20 games will be supported initially.

from TechRadar: computing components news https://ift.tt/EtQdYc6
